Machine-Learning Based Rightsizing: Is it worth it?

Back in March, we shared an exciting story of creating a POC for a machine-learning-based infrastructure rightsizing mechanism.

Naturally, such an interesting initiative and such promising results could not be put aside. We kept working on the tool to see how far we could get and, of course, whether all our work is worth the benefits it could bring.

From POC to a Product

The initial POC version of Maestro Cost Advisor (that’s what we called it) was quite simple in terms of functionality: it took the virtual machines’ performance metrics for 4 days, analyzed the CPU and memory load and the timelines, and suggested one of the following actions for each instance:

- Scale up

- Scale down

- Shutdown

- Schedule

The mechanism analyzed the real load on the instances and suggested new instance types based on the 90th percentile of each parameter.
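To illustrate the idea, here is a minimal sketch of percentile-based sizing. It is not the actual Maestro Cost Advisor code: the instance catalog, the `recommend` function, and the `target_util` headroom factor are all hypothetical and only show how a 90th percentile of CPU and memory load could be mapped to an instance type.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float


# Hypothetical catalog, ordered from smallest to largest.
CATALOG = [
    InstanceType("small", 2, 4.0),
    InstanceType("medium", 4, 8.0),
    InstanceType("large", 8, 16.0),
]


def p90(samples):
    """Return the 90th percentile of a list of at least two samples."""
    # quantiles(n=10) returns 9 cut points; the last one is the 90th percentile.
    return quantiles(samples, n=10, method="inclusive")[-1]


def recommend(current, cpu_pct, mem_pct, target_util=0.8):
    """Pick the smallest catalog type that covers the p90 demand.

    cpu_pct and mem_pct are utilization samples (0-100) measured on the
    current instance. target_util is an assumed headroom factor: the new
    type should run at no more than 80% at the p90 load.
    """
    # Convert utilization percentages into absolute demand.
    cpu_need = current.vcpus * p90(cpu_pct) / 100
    mem_need = current.memory_gib * p90(mem_pct) / 100
    for candidate in CATALOG:
        if (candidate.vcpus * target_util >= cpu_need
                and candidate.memory_gib * target_util >= mem_need):
            return candidate
    return CATALOG[-1]  # nothing fits: fall back to the largest type
```

If the returned type is smaller than the current one, the suggestion is a scale-down; if it is larger, a scale-up. Shutdown and schedule suggestions would need the load timelines as well, which this sketch leaves out.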

The approach was good enough to prove that the mechanism would work, but definitely not enough to become a business tool.

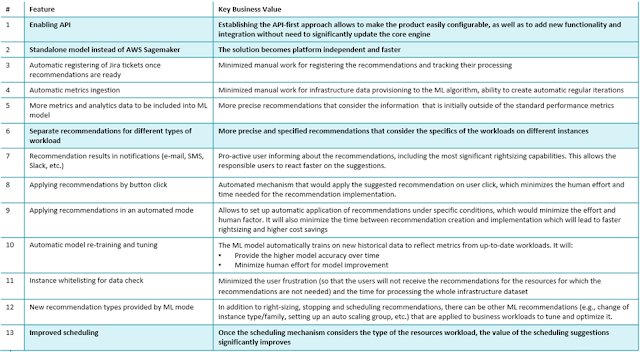

Thus, we had a brainstorming session to come up with ideas on how to turn our POC into an enterprise-ready product. Based on our experience of working with the cloud, as well as on conversations with our customers, we identified 13 possible features that could be added within a reasonable time. Out of them, we selected 4 that could be used to create a Minimum Viable Product (MVP).

Planning a Product

When selecting features for an MVP, we focused on the main tasks that had to be resolved for our mechanism to bring real value to any business.

· Enabling API. An API-first approach enables quick configuration of the application and makes it possible to quickly integrate it with the customer’s own or third-party systems, bringing more value to the customer.

· Standalone model. The Maestro Cost Advisor POC was built on AWS SageMaker, as it was the fastest way for the team to implement the ML. Even though the tool can potentially work with data from any cloud (and uses Azure in the POC), having its “brain” in AWS could be a significant drawback, both for those who just don’t want to use AWS and for those who want to keep all mechanisms within their existing infrastructure. Thus, we need to rebuild our tool so that it can be deployed on any customer-defined platform, either cloud or on-premises.

· Workload-specific recommendations. Any enterprise has multiple environments and workload types. Production and testing, databases and load balancers: each instance has its own tasks and performance specifics. Naturally, the recommendations should take all of this into account to bring real value to the business.

Is it worth it?

Putting effort into creating a minimum viable product is a serious decision. The effort needed for implementation should be carefully weighed against the possible benefits.

We decided to check this by calculating the possible Return on Investment (ROI), based on a large enterprise infrastructure where we would be able to try our product in action once it is developed.

In the calculation, we took into account the time needed to finalize the ML-based recommendation mechanism and integrate it with Maestro (3 months), the Maestro licence (based on the covered infrastructure cost), and the estimated cost of a large-scale enterprise infrastructure.
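For readers who want to run a similar estimate on their own numbers, the inputs above can be combined roughly as in the sketch below. This is an assumption about the shape of such a calculation, not our actual model: the function name, the `savings_rate` parameter (the share of infrastructure cost that rightsizing is expected to save), and the idea of expressing the licence as a rate of the covered infrastructure cost are all illustrative.

```python
def first_year_roi(infra_cost, savings_rate, dev_cost, licence_rate):
    """Return a simple first-year ROI as a fraction (1.0 means 100%).

    infra_cost    yearly cost of the covered infrastructure
    savings_rate  assumed share of infra_cost saved by rightsizing (0-1)
    dev_cost      cost of the development time (e.g. 3 months of work)
    licence_rate  assumed licence price as a share of infra_cost (0-1)
    """
    savings = infra_cost * savings_rate
    licence = infra_cost * licence_rate
    investment = dev_cost + licence
    if investment <= 0:
        raise ValueError("investment must be positive")
    # Classic ROI: net gain divided by the money invested.
    return (savings - investment) / investment
```

A positive value means the savings exceed the investment within the first year. Since the development cost is paid only once, the same formula with `dev_cost=0` gives a rough idea of the following years.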

The result looks more than promising:

Still, the mechanism is currently considered a future part of Maestro, although its implementation is not a top-priority task at the moment.

This means that once the development is over, the price of introducing machine learning to the FinOps processes of Maestro customers will be even smaller, and the ROI will grow tremendously.
